One

of the major concerns in using SQL Azure is the security of data such as credit

card numbers, Social Security numbers, salaries, bonuses etc. The degree to

which data needs to be protected is to be determined by each business entity

but generally, on-site data is more secure than data stored in the cloud.

This is a simple example of using SQL Server Integration Services SIS and

SQL Server Reporting Services tools to accomplish just that.

We start off with this scenario: The fictitious

company SecureAce wants to place one of their Employee tables on SQL Azure, but

they do not want to keep any sensitive information such as employee salaries.

However from time to time they need to generate report of their employees and

salaries to management.

The solution to this scenario is divided

in two parts.

In the first part, the on-site data in the

employees table is partitioned in such a way that the sensitive information

stays on-site and the larger, non-sensitive data is stored on SQL Azure.

In the second part SSIS is used to bring

the two pieces of data together and load an Access database (on-site) which is used

as a front end for reporting information to management, an entirely realistic

way of data management. Although a Microsoft Access database is used, any other

destination handled by SSIS can also be used[s1] , such as another SQL Server database.

Herein we used MS Access as it is a very common product used in many small

businesses.

It

may be noted however that Microsoft is now supporting connecting SQL Azure to

MS Access directly, review this link for details: http://social.msdn.microsoft.com/Forums/en-US/ssdsgetstarted/thread/05dd7620-f209-43d2-8c41-63b251c62970. With the availability of Microsoft

Office Professional Plus 2010, the author was able to directly connect to SQL

Azure using an ODBC connection.

Splitting the data and uploading to SQL Azure

This is a preparation for the SSIS task

that follows. We will be using Northwind database’s Employee table and splitting it in two parts each

containing different columns, a vertical partition. One part will remain on

site which contains the salary information of employees and the other which is

loaded to SQL Azure will contain most of other information. In the Northwind database, the employee table

does not have a salary column and hence an extra column will be added for this

simulation. The procedure is described in the following[s2] steps[Maitreya3] .

·

Create

a table Employees in VerticalPart using the following statement:

CREATE TABLE [dbo].[Employees](

[EmployeeID] [int] PRIMARY KEY CLUSTERED NOT NULL,

[LastName] [nvarchar](20) NOT NULL,

[FirstName] [nvarchar](10) NOT NULL,

[HomePhone] [nvarchar](24) NULL,

[Extension] [nvarchar](4) NULL,

[Salary] [money] NULL

)

·

Use

Import / Export Wizard to populate the columns (except Salary) of the above

table using Northwind's Employees table

·

Modify

table by adding salary for each employee

[s6] [j7] There are only few employees and this

should not be a problem. When you want to save the table, you may not be able

to do so unless you have turned-on this option, in the Tools menu of SSMS. You

will get a reply after you save [s8] [j9] the Employees table as shown.

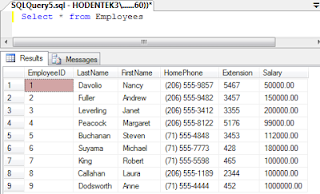

Now

run a SELECT query to verify that the salary column has been populated as shown.

Copy

the script for Northwind’s Employee table and modify it by changing the table

name and removing some columns resulting in the following statement:

CREATE TABLE [dbo].[AzureEmployees](

[EmployeeID] [int] PRIMARY KEY CLUSTERED NOT NULL,

[LastName] [nvarchar](20) NOT NULL,

[FirstName] [nvarchar](10) NOT NULL,

[Title] [nvarchar](30) NULL,

[TitleOfCourtesy] [nvarchar](25) NULL,

[HireDate] [datetime] NULL,

[Address] [nvarchar](60) NULL,

[City] [nvarchar](15) NULL,

[Region] [nvarchar](15) NULL,

[PostalCode] [nvarchar](10) NULL,

[Country] [nvarchar](15)

)

Note that the table name has been changed

to AzureEmployees. This is the table that will be stored in the Bluesky

database on SQL Azure.

Login

to SQL Azure and create the table in Bluesky database by running the above

create table statement.

The table will be created with the above

schema which you may verify in the Object Browser.

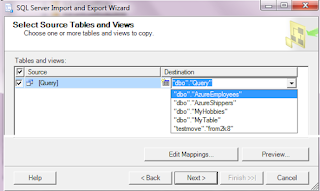

Use

Import and Export Wizard to populate the columns of AzureEmployees with data

from Northwind. Use the query option to move data from source to destination

using the following query.

SELECT EmployeeID, LastName, FirstName,

Title, TitleOfCourtesy, HireDate,

Address, City,Region, PostalCode,

Country

FROM

Employees

Save

the query results to the AzureEmployees table you created earlier as shown.

Follow

wizard’s steps to review data mapping as shown

Complete

the wizard steps as shown.

Verify

data in AzureEmployees in Bluesky database on SQL Azure by running a SELECT

statement.

By following the above we have created two

tables, one on-site and the other on SQL Azure.

Although data transformation of string

data types did not present any error due to string length it could present some

problems if the string length is over 8000 if the strings are of type varchar (max)

and text. In these cases just change them to nvarchar (max) to overcome the

problem. For details review the following link:

http://blogs.msdn.com/b/sqlazure/archive/2010/06/01/10018602.aspx

Merging data and loading an Access database

In this section we

will reconstruct the Employees table on-site by retrieving data from SQL Azure

as well as SQL Server’s VerticalPart database and merge them. After merging

them, we will place them in an MS Access database so that simple reports can be

authored.

In order to do

this we take the following steps.

- Click open BIDS from its shortcut.

- Create a Integration Services Project after providing a name for the project. Change the default name of the Package file.

The Project folder

should appear as shown in the next image. Project name and Package name were

provided.

- Drag and drop a Data Flow task to the Control Flow tabbed page of the package designer surface.

- In the bottom pane Connection Managers, configure connection managers one each for SQL Azure database; VerticalPart database on SQL Server 2008; and an MS Access database as shown.

The next image

shows the details of the connection manager Hodentek3\KUMO.VerticalPart. Note that SqlClient Data Provider is used. The SQL

Server Hodentek3\KUMO is configured for

Windows

Authentication.

This next image

shows the connection xxxxxxxxxx.database.windows.net.Bluesky.mysorian1

for the Bluesky database on SQL Azure. The

authentication information is the same one you have used so far and, if it is

correct you should be able to see the available databases.

- Create an MS Access database (Access 2003 format) and use it for this connection.

Later we also

create a table in this database to receive the merged fields from SQL Azure and

the on-site server.

For this

connection manager we use the following settings and verify by clicking the Test Connection button:

Provider: Native OLE DB\Microsoft Jet

4.0 OLE DB Provider

Database file is at: C:\Users\Jay\AccessSQLAzure.mdb

User name: Admin

Password: <empty>

It is assumed that

the reader has familiarity with using SSIS. The author recommends his own book

on SSIS for beginners, which may be found here: https://www.packtpub.com/sql-server-integration-services-visual-studio-2005/book.

Each of the above

connections can be tested using the Test Connection button on them.

Merging columns

from SQL Azure and SQL Server

You will use two

ADO.NET Source data flow sources, one each for SQL Azure and SQL Server. The

outputs will be merged.

- Add two ADO.NET data flow sources to the tabbed designer pane Data Flow.

- Rename the default names of the source components to read From SQL Azure Database and From SQL Server 2008 database.

- Configure the ADO.NET Source Editor connected to SQL Azure to display the following as shown in the next image.

ADO.NET Connection manager:

XXXXXXX.database.windows.net.Bluesky.mysorian1

Data access mode: Table or view

Name of the table or view:

"dbo"."AzureEmployees"

You must use the server name appropriate

for your SQL Azure instance.

Configured

as shown and you should be able to view the data in this table with the Preview…button.

- Configure the ADO.NET Source Editor connected to SQL Server to display the following as shown in the next image.

Use

the following details to configure From SQL Server 2008 database

source used in the ADO.NET Source Editor are as follows:

ADO.NET Connection manager:

Hodentek3\KUMO.Verticalpart

Data access mode: Table or view

Name of the table or view:

"dbo"."Employees"

Again

you should be able to view the data in this table with the Preview…button.

Sorting the

outputs of the sources

Since the data

coming at the exit point of the sources are not sorted it is important to get

the sorting correct and same in both sources before they can be merged.

- Drag and drop two Sort dataflow controls from the Toolbox to the design surface just below the ADO.NET data sources.

- Start with the one that is going to be receiving its input from the From SQL Azure Database source control.

- Click From SQL Azure Database and drag and drop the green dangling line on to the Sort control below it as shown.

- Double click the Sort control to display the Sort Transformation Editor and place a check mark for EmployeeID as shown.

- Repeat the same procedure for the From SQL Server 2008 Database source. Now we have two sort controls receiving their inputs from two source controls with outputs sorted.

- Drag and drop a Merge Join Data Flow Transformation from the Toolbox on to the design surface.

- Click the Sort data flow transformation on the left (connected to From SQL Azure Database) and drag and drop its green dangling line on to the Merge Join data flow transformation.

The Input Output Selection window will be

displayed as shown.

- Select the Merge Join Left Input and click OK.

- Repeat the same for the other Sort on the right (this time select Merge Join Right Output).

This

Merge control now merges the output from the two sort controls and provides a

merged output.

You still need to

configure the Merge

Join.

- Double click Merge Join to open the Merge Join Transformation editor page as shown.

Read

the instructions on this window.

- Place check mark for EmployeeID in both the Sort lists shown in the top pane. The bottom pane gets populated with Input columns and Output aliases. Make sure the join type is Left outer join as in the above image (use drop-down handle if needed).

We can add for

each flow path a Data Viewer so that we can monitor the flow of data at run

time by momentarily stopping the flow downstream. We are skipping this

diagnostic step.

Porting output

data from Merge Join to an MS Access Database

We will be using

the merged data from the two sources to fill up a table in an MS Access 2003

database.

- In the MS Access database you created while setting up the Connection Managers create a table, Salary Report table with the design parameters shown in the next image.

- Drag and drop an OLE DB Destination component from the Toolbox on to the package designer pane just underneath the Merge Join component.

- Drag and drop the green dangling line from Merge Join to the OLE DB Destination component.

- Double click the OLE DB Destination component to open its editor and fill in the details as follows:

OLEDB connection manager: AccessSQLAzure

Data access mode: Table or View

Name of the table or view: Salary Report

- Click Mappings to verify all the columns are present.

- Build the project and execute the package.

The

package elements turn yellow and later green indicating a successful run.

You

can verify the table in the access database for the transferred values. This

should have all the merged columns from the two databases. Note that in the

image, columns have been rearranged to move the Salary column into view.

This is an excerpt of Chapter 6 from my book:

Book published by http://www.packtpub.com/

Also take a look at my two other books published also by Packt:

No comments:

Post a Comment